Top: A comparison between the standard updating process for Image Copy Detection (ICD) and the proposed in-context ICD. Unlike the standard approach, in-context ICD requires no fine-tuning, which makes it more efficient. Bottom: AnyPattern is the first large-scale pattern dataset, featuring 90 base and 10 novel patterns. From the 90 base patterns, we generate a training dataset of 10 million images.

Abstract

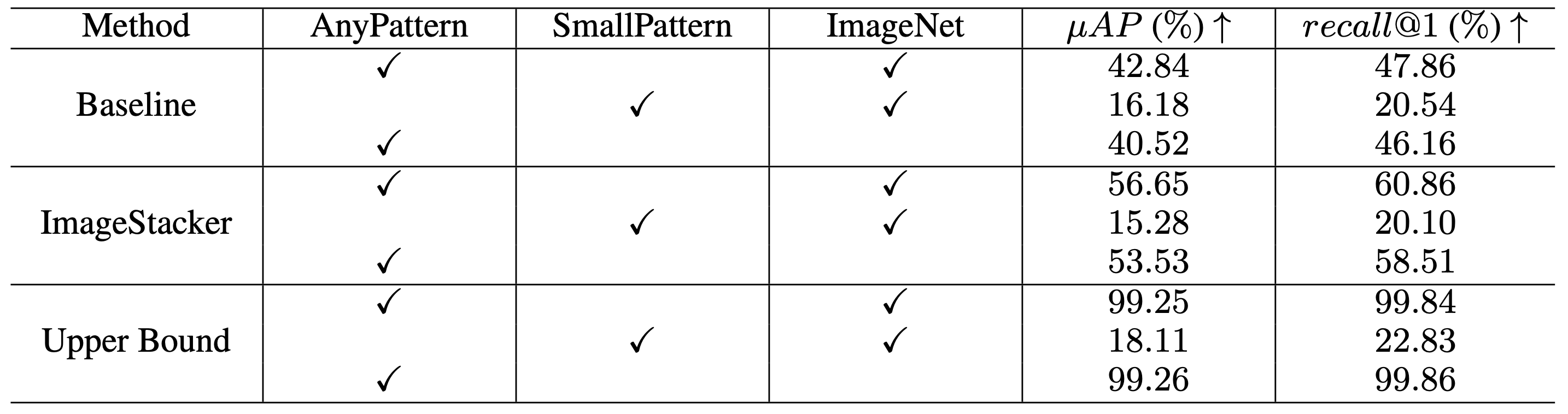

This paper explores in-context learning for image copy detection (ICD), i.e., prompting an ICD model to identify replicated images with new tampering patterns without the need for additional training. The prompts (or the contexts) are drawn from a small set of image-replica pairs that reflect the new patterns and are used at inference time. Such in-context ICD has practical value because it requires no fine-tuning and thus enables a fast reaction to the emergence of unseen patterns. To accommodate the “seen → unseen” generalization scenario, we construct the first large-scale pattern dataset, named AnyPattern, which has the largest number of tamper patterns (90 for training and 10 for testing) among all existing datasets. We benchmark AnyPattern with popular ICD methods and reveal that existing methods barely generalize to novel tamper patterns. We further propose a simple in-context ICD method named ImageStacker. ImageStacker learns to select the most representative image-replica pairs and employs them as pattern prompts in a stacking manner (rather than the popular concatenation manner). Experimental results show that (1) training with our large-scale dataset substantially benefits pattern generalization (+26.66% μAP), (2) the proposed ImageStacker facilitates effective in-context ICD (a further +16.75% μAP), and (3) AnyPattern enables in-context ICD, i.e., without such a large-scale dataset, in-context learning does not emerge even with our ImageStacker. Beyond the ICD task, we also demonstrate how AnyPattern can benefit artists, i.e., the pattern retrieval method trained on AnyPattern can be generalized to identify style mimicry by text-to-image models.

In-context Image Copy Detection

An illustration of our in-context Image Copy Detection (ICD) with AnyPattern. In-context ICD requires a well-trained ICD model to adapt to novel patterns when prompted with a few image-replica pairs, without any fine-tuning. This setup is highly practical in realistic scenarios because it offers a feasible solution for a deployed ICD system facing unseen patterns.

Method Overview

The proposed ImageStacker includes (a) prompt selection, which fetches the most representative image-replica pair from the whole pool for a given query, and (b) prompting design, which stacks the selected image-replica pair onto the query along the channel dimension so that the pair conditions the feed-forward process. In (c), we show how to unify prompt selection and prompting design in a single vision transformer.
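The selection and stacking steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the released ImageStacker code: `select_prompt` uses plain cosine similarity as a stand-in for the learned prompt selection, images are represented as channel-first lists of H×W planes, and every function name here is hypothetical. `concat_width` is included only to contrast stacking with spatial concatenation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_prompt(query_feat, pool_feats):
    """Return the index of the pool entry most similar to the query.

    Assumption: nearest neighbour by cosine similarity; the paper's
    selection is learned, so this is only a stand-in.
    """
    return max(range(len(pool_feats)),
               key=lambda i: cosine(query_feat, pool_feats[i]))


def stack_channels(query, original, replica):
    """Stack three channel-first images along the channel axis (C -> 3C)."""
    shapes = {(len(plane), len(plane[0]))
              for img in (query, original, replica) for plane in img}
    if len(shapes) != 1:
        raise ValueError("all images must share the same height and width")
    # Channel-first layout: an image is a list of planes, so stacking
    # along the channel axis is list concatenation of the planes.
    return query + original + replica


def concat_width(query, original, replica):
    """Contrast case: place the three images side by side (W -> 3W)."""
    return [[q_row + o_row + r_row
             for q_row, o_row, r_row in zip(q_plane, o_plane, r_plane)]
            for q_plane, o_plane, r_plane in zip(query, original, replica)]


if __name__ == "__main__":
    # Toy 1-channel, 2x2 images and 2-dimensional features.
    query = [[[1, 2], [3, 4]]]
    pool = [
        ([[[5, 6], [7, 8]]], [[[9, 10], [11, 12]]]),  # (original, replica)
        ([[[0, 0], [0, 0]]], [[[1, 1], [1, 1]]]),
    ]
    pool_feats = [[0.9, 0.1], [0.0, 1.0]]
    best = select_prompt([1.0, 0.0], pool_feats)
    original, replica = pool[best]
    stacked = stack_channels(query, original, replica)
    print(best, len(stacked))
```

Stacking keeps the spatial size fixed and grows the channel count, whereas concatenation keeps the channel count and grows the image width.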

AnyPattern Enables In-context ICD

In-context learning does not emerge when using SmallPattern and ImageNet-pretrained models: compared with the baseline at 16.18% μAP, ImageStacker achieves only 15.28% μAP.

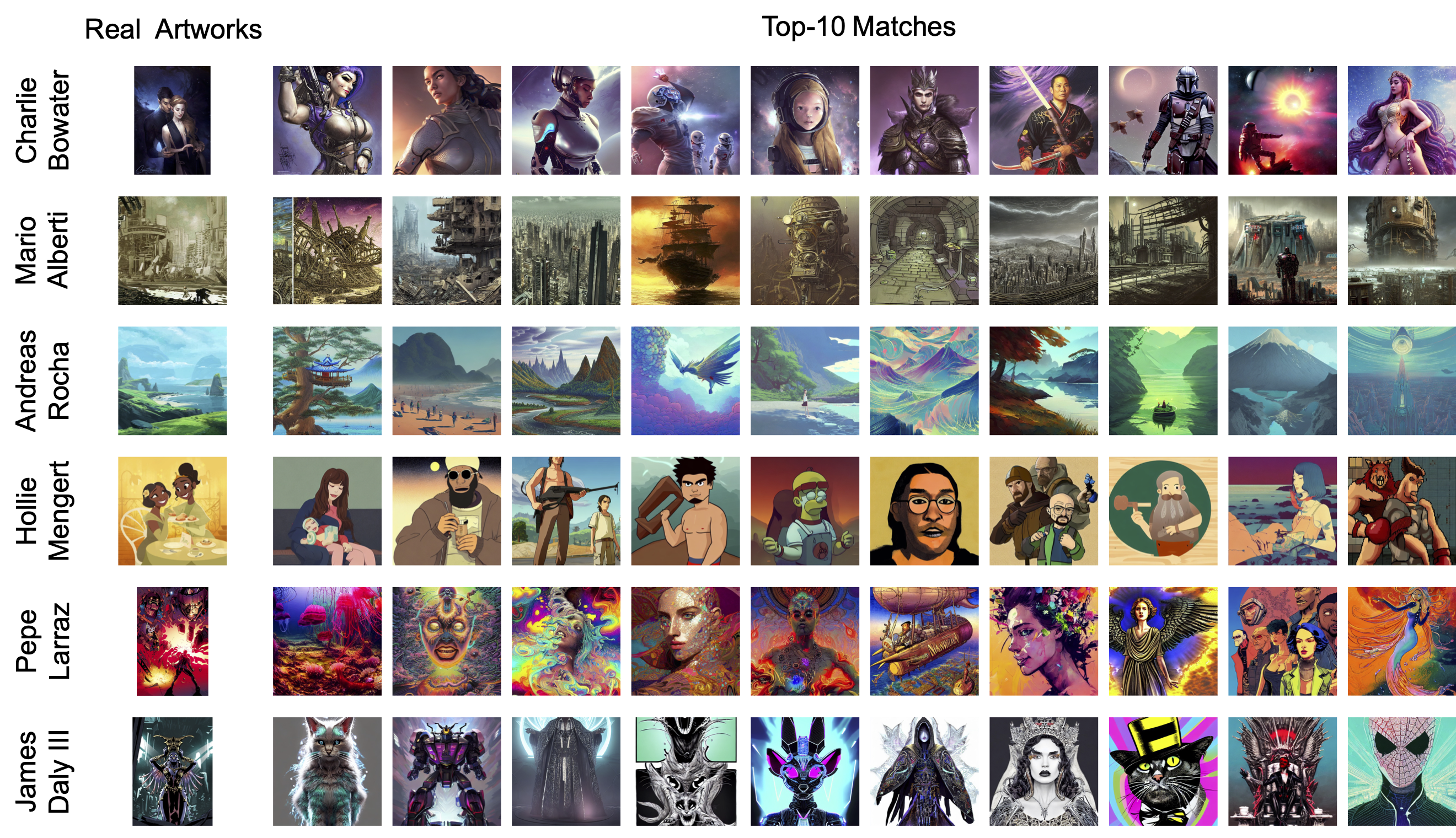
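For readers unfamiliar with the μAP numbers quoted on this page, the sketch below shows one common way to compute a micro-average precision. It assumes the convention used in copy-detection benchmarks, where all predicted query-reference matches are ranked together by confidence and a single average precision is computed over that global list. The page does not spell out the exact evaluation protocol, so treat the function name `micro_ap` and its input format as hypothetical.

```python
def micro_ap(predictions, num_positives):
    """Micro-average precision over a global ranking.

    predictions: iterable of (score, is_correct) pairs, one per predicted
        query-reference match, pooled across all queries.
    num_positives: total number of ground-truth matches, including any
        that were never predicted.
    """
    ranked = sorted(predictions, key=lambda p: p[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, is_correct) in enumerate(ranked, start=1):
        if is_correct:
            hits += 1
            # Precision at this rank, accumulated only at correct matches.
            precision_sum += hits / rank
    return precision_sum / num_positives if num_positives else 0.0


if __name__ == "__main__":
    preds = [(0.9, True), (0.8, False), (0.7, True)]
    print(round(100 * micro_ap(preds, num_positives=2), 2))
```

Because the ranking is global, a confident wrong match on one query lowers the precision credited to every correct match ranked below it, including matches for other queries.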

AnyPattern Helps Artists

Text-to-image models can mimic the style of an artwork at little cost, which threatens the livelihoods and creative rights of artists. To help artists protect their work, we treat an artist’s ‘style’ as a ‘pattern’ and generalize the trained pattern retrieval method to identify generated images that exhibit style mimicry.

Paper

AnyPattern: Towards In-context Image Copy Detection

Wenhao Wang, Yifan Sun, Zhentao Tan, and Yi Yang

arXiv 2024

@article{wang2025AnyPattern,
  title={AnyPattern: Towards In-context Image Copy Detection},
  author={Wang, Wenhao and Sun, Yifan and Tan, Zhentao and Yang, Yi},
  journal={International Journal of Computer Vision},
  year={2025},
}

Contact

If you have any questions, feel free to contact Wenhao Wang (wangwenhao0716@gmail.com).

Acknowledgements

This template was originally made by Phillip Isola and Richard Zhang for a colorful project, and inherits the modifications made by Jason Zhang and Shangzhe Wu. The code can be found here.